Introduction

The realm of programming languages has seen the rise of many contenders, each offering distinct advantages to developers. Two that have gained significant attention in recent years are Go (often called Golang, and written GoLang throughout this article) and Rust. Both are modern languages designed for building robust and efficient applications. In this article, we compare GoLang and Rust on features, performance, concurrency, and community support, and look at which one comes out ahead in this programming language showdown.

A Brief Overview of GoLang and Rust

GoLang: GoLang was designed at Google starting in 2007 and released publicly as open source in 2009. It has gained immense traction due to its simplicity, ease of use, and fast compilation times. Its concise syntax and garbage collector have made it a common choice for building web servers, networking tools, and cloud-based applications. GoLang’s built-in concurrency features, goroutines and channels, let developers write highly concurrent, scalable programs with relative ease.

Rust: Rust, on the other hand, grew out of a project sponsored by Mozilla Research. It was first announced publicly in 2010 and reached its stable 1.0 release in 2015. It has risen quickly since then, becoming popular for its focus on memory safety, zero-cost abstractions, and fearless concurrency. Rust’s ownership model, enforced by the borrow checker, provides strong memory safety guarantees without a garbage collector, making it an excellent option for systems-level programming, embedded devices, and performance-critical applications.

Performance and Efficiency

GoLang: GoLang’s design prioritizes simplicity and readability, making it well suited to quick prototyping and easy maintenance. Its garbage collector automates memory management, reducing the burden on developers. This convenience adds some runtime overhead in the form of collector pauses and extra memory use, which makes GoLang less suited to latency-critical or tightly memory-constrained applications.

Rust: Rust, with its emphasis on zero-cost abstractions and memory management that is resolved at compile time rather than by a garbage collector, achieves remarkable performance. Its borrow checker rules out data races and dangling references in safe code at compile time, and safe Rust has no null references: a value that may be absent is expressed with the Option type. While this leads to more verbose code and a steeper learning curve, Rust’s safety guarantees make it an appealing choice for high-performance applications where efficiency is paramount.
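To make the ownership and borrowing rules more concrete, here is a minimal sketch using only the Rust standard library. The function names `first_word` and `append_suffix` are hypothetical and chosen purely for illustration.

```rust
/// Returns the first whitespace-separated word of `text`, or `None` if there is none.
/// (Hypothetical helper.) `Option` takes the place of a nullable pointer, so the
/// caller has to handle the empty case explicitly.
fn first_word(text: &str) -> Option<&str> {
    text.split_whitespace().next()
}

/// Appends `suffix` through an exclusive (mutable) borrow. (Hypothetical helper.)
fn append_suffix(buffer: &mut String, suffix: &str) {
    buffer.push_str(suffix);
}

fn main() {
    let mut message = String::from("hello world");

    // Shared borrow: read-only access. Only the length is kept, so the borrow
    // ends on this line.
    let word_len = first_word(&message).map(str::len).unwrap_or(0);

    // Exclusive borrow: accepted because no shared borrow is still in use.
    append_suffix(&mut message, "!");

    // Ownership moves into `archived`. Using `message` after this line would be
    // rejected by the compiler.
    let archived = message;
    println!("{} (first word is {} bytes long)", archived, word_len);
}
```

If the result of `first_word` were still held while `append_suffix` was called, the borrow checker would refuse to compile the program, since a shared and an exclusive borrow of the same value cannot be live at the same time.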

Concurrency and Parallelism

GoLang: One of GoLang’s standout features is its first-class support for concurrency through goroutines and channels. This makes it exceptionally easy to write concurrent programs that effectively utilize multiple CPU cores, leading to scalable and efficient applications. GoLang’s “Do not communicate by sharing memory; instead, share memory by communicating” approach simplifies concurrent programming for developers.

Rust: Rust also embraces concurrent programming with its “fearless concurrency” model. The ownership system and type checker reject code that shares data between threads unsafely, so many threading mistakes surface as compile errors rather than runtime bugs. Its async/await syntax lets developers write asynchronous code that uses system resources efficiently, although the executor that runs such code comes from a library rather than the standard library. While not as straightforward as GoLang’s approach, Rust’s concurrency capabilities provide strong safety guarantees and performance benefits for complex systems.
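As a rough illustration of how ownership ties into thread safety, the sketch below sums a list across several threads using only the standard library. The function name `parallel_sum` and the chunking strategy are assumptions made for this example, not a recommended design.

```rust
use std::sync::{Arc, Mutex};
use std::thread;

/// Sums `values` using up to `workers` threads. (Hypothetical helper.)
/// The running total is shared behind `Arc<Mutex<_>>`.
fn parallel_sum(values: Vec<u64>, workers: usize) -> u64 {
    let workers = workers.max(1);
    // Round up so every element lands in some chunk; never zero.
    let chunk_size = ((values.len() + workers - 1) / workers).max(1);
    let total = Arc::new(Mutex::new(0u64));
    let mut handles = Vec::new();

    for chunk in values.chunks(chunk_size) {
        // Each thread owns its own copy of the chunk...
        let chunk = chunk.to_vec();
        // ...and its own reference-counted handle to the shared total.
        let total = Arc::clone(&total);
        handles.push(thread::spawn(move || {
            let partial: u64 = chunk.iter().sum();
            // The shared value can only be reached by taking the lock.
            *total.lock().unwrap() += partial;
        }));
    }

    // Wait for every worker before reading the result.
    for handle in handles {
        handle.join().unwrap();
    }

    let result = *total.lock().unwrap();
    result
}

fn main() {
    let values: Vec<u64> = (1..=100).collect();
    println!("sum = {}", parallel_sum(values, 4));
}
```

Swapping `Arc` for the non-atomic `Rc`, or trying to mutate the total without the `Mutex`, would be a compile error rather than a runtime data race. For a simple sum, returning each partial result from `join` would avoid the lock entirely. The shared counter is used here only to show the compiler-checked sharing.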

Community and Ecosystem

GoLang: GoLang’s popularity has grown significantly over the years, thanks to its simplicity and suitability for modern application development. The Go ecosystem offers a wide range of libraries and packages, making it easier for developers to build various types of applications. Its large community and strong support from Google ensure that GoLang will continue to evolve and improve.

Rust: Rust has also seen a substantial increase in popularity, particularly among developers who prioritize memory safety and performance. Its growing ecosystem includes a diverse set of libraries and tools, making it increasingly attractive for a wide range of projects. Rust’s community is known for its friendliness and willingness to help newcomers, contributing to the language’s success.

Conclusion

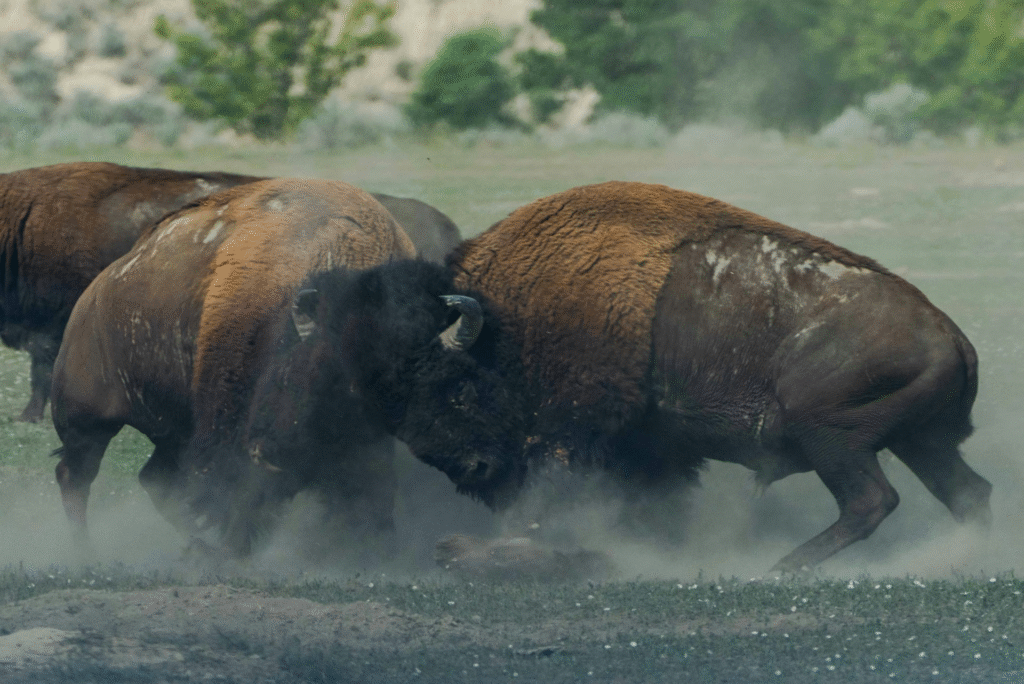

In the battle between GoLang and Rust, there is no clear winner: the right choice depends on the specific requirements of the project and the preferences of the developers involved. GoLang excels in simplicity, ease of use, and concurrent programming, making it a top choice for web-based applications and networking tools. Rust, on the other hand, shines in memory safety, performance, and system-level programming, making it ideal for projects that demand the utmost efficiency and security.

Ultimately, both GoLang and Rust have carved out significant niches in the programming language landscape, and their growing communities and ecosystems ensure they will remain relevant and continue to improve. Developers should carefully assess their project’s needs, team experience, and long-term goals before deciding between these two powerful languages. As the programming world continues to evolve, it is likely that GoLang and Rust will continue to be at the forefront of innovation and progress, pushing the boundaries of what is possible in software development.